Section: New Results

Interaction techniques

Participants : Axel Antoine, Géry Casiez, Fanny Chevalier, Stéphane Huot, Sylvain Malacria [correspondent] , Thomas Pietrzak, Sébastien Poulmane, Nicolas Roussel.

Touch interaction with finger identification: which finger(s) for what?

HCI researchers lack low-latency, robust systems to support the design and development of interaction techniques that leverage finger identification. We developed a low-cost prototype, called WhichFingers (see Fig. 3), using piezo-based vibration sensors attached to each finger [29]. By combining the events from an input device with the information from the vibration sensors, we demonstrated how to achieve low-latency and robust finger identification. We evaluated our prototype in a controlled experiment, showing recognition rates of 98.2% for keyboard typing, 99.7% for single touch on a touchpad, and 94.7% for two simultaneous touches on a touchpad. These results were confirmed in an additional laboratory experiment with ecologically valid tasks.
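The idea of combining input events with vibration sensor data can be illustrated with a minimal Python sketch. It is not the WhichFingers algorithm: it simply assumes that each sensor reports timestamped vibration peaks and attributes an input event to the finger whose peak is closest in time. The names `VibrationPeak` and `identify_finger`, as well as the `window_ms` and `min_amplitude` values, are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class VibrationPeak:
    """A vibration peak reported by the sensor worn on one finger (hypothetical format)."""
    finger: str          # e.g. "index"
    timestamp_ms: float  # time of the peak
    amplitude: float     # normalized peak amplitude in [0, 1]


def identify_finger(event_ms: float,
                    peaks: Sequence[VibrationPeak],
                    window_ms: float = 30.0,      # assumed matching window
                    min_amplitude: float = 0.2    # assumed noise floor
                    ) -> Optional[str]:
    """Return the finger whose vibration peak best matches an input event.

    Peaks outside the time window or below the noise floor are ignored.
    Returns None when no peak qualifies.
    """
    candidates = [
        p for p in peaks
        if abs(p.timestamp_ms - event_ms) <= window_ms
        and p.amplitude >= min_amplitude
    ]
    if not candidates:
        return None
    # Prefer the peak closest in time; break ties with the stronger amplitude.
    best = min(candidates,
               key=lambda p: (abs(p.timestamp_ms - event_ms), -p.amplitude))
    return best.finger
```

In this sketch, a touch event at 104 ms with an index-finger peak at 100 ms would be attributed to the index finger, whereas an event with no nearby peak would remain unidentified.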

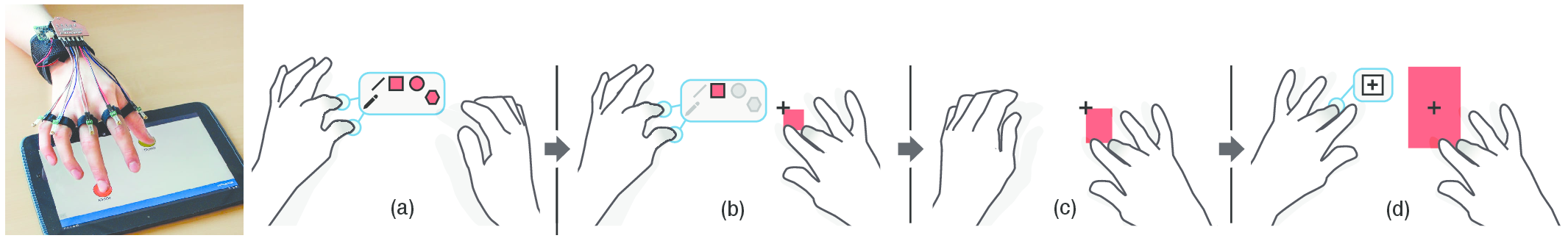

We also explored the large input space made possible by such finger identification technology. FingerCuts, for instance, is an interaction technique inspired by desktop keyboard shortcuts [14]. It enables integrated command selection and parameter manipulation, uses feed-forward and feedback to increase discoverability, is backward-compatible with current touch input techniques, and is adaptable to different form factors of touch devices: tabletop, tablet, and smartphone. Qualitative and quantitative studies conducted on a tabletop suggest that, with some practice, FingerCuts is expressive, easy to use, and increases the sense of continuous interaction flow. Interaction with FingerCuts was found to be as fast as or faster than using a graphical user interface. A theoretical analysis of FingerCuts using the Fingerstroke-Level Model (FLM) [43] matches our quantitative study results, justifying our use of FLM to analyze and estimate performance for other device form factors.

Force-based autoscroll

Autoscroll, also known as edge-scrolling, is a common interaction technique in graphical interfaces that allows users to scroll a viewport while in dragging mode: once in dragging mode, the user moves the pointer near the viewport's edge to trigger “automatic” scrolling. In spite of their wide use, existing autoscroll methods suffer from several limitations [39]. First, most autoscroll methods over-rely on the size of the control area: the larger it is, the faster the scrolling rate can be. Therefore, the level of control depends on the available distance between the viewport and the edge of the display, which can be limited. This is, for example, the case with small displays or when the view is maximized. Second, depending on the task, the user's intention can be ambiguous (e.g. dragging and dropping a file is ambiguous because the user's target may be located within the initial viewport or in a different one on the same display). To reduce this ambiguity, the control area is made drastically smaller for drag-and-drop operations, which in turn impairs scrolling rate control because the user has only a limited input area with which to control the scrolling speed.
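The first limitation can be made concrete with a short Python sketch of a distance-based autoscroll mapping. This is an illustrative assumption, not the behavior of any specific system: the function name `edge_scroll_rate`, the linear mapping, and the `gain` value are hypothetical.

```python
def edge_scroll_rate(pointer_y: float,
                     viewport_bottom: float,
                     display_bottom: float,
                     gain: float = 2.0) -> float:
    """Scroll rate (pixels/s) for a pointer dragged past the viewport's bottom edge.

    The rate grows linearly with the distance travelled past the edge
    (assumed mapping), but that distance cannot exceed the control area,
    i.e. the space between the viewport edge and the display edge.
    """
    control_area = max(display_bottom - viewport_bottom, 0.0)
    overshoot = min(max(pointer_y - viewport_bottom, 0.0), control_area)
    return gain * overshoot
```

With a 100-pixel control area the maximum rate under these assumptions is 200 pixels/s, but with a 10-pixel control area (for instance a nearly maximized window) it drops to 20 pixels/s, which illustrates why the level of control depends on the available distance.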

We presented a conceptual framework of factors influencing the design of edge-scrolling techniques [12]. We then analyzed 33 different desktop implementations by reverse-engineering their behavior, and demonstrated substantial variance in their design approaches. Results of an interactive survey with 214 participants show that edge-scrolling is widely used and valued, but also that users encounter problems with control and with behavioral inconsistencies. Finally, we reported results of a controlled experiment comparing four different implementations of edge-scrolling, which highlight factors from the design space that contribute to substantial differences in performance, overshooting, and perceived workload.

We also explored how force-sensing input, which is now available on several commercial devices, can be used to overcome the limitations of autoscroll. Force sensing is an interesting candidate because: 1) users are often already applying a (relatively soft) force on the input device when using autoscroll, and 2) varying the force on the input device does not require moving the pointer, thus making it possible to offer control to the user while using a small and consistent control area regardless of the task and the device. We designed ForceEdge, a novel interaction technique that maps the force applied on a trackpad to the autoscrolling rate [18]. We implemented a software interface that can be used to design different transfer functions mapping force to autoscrolling rate, and to test these mappings for text selection and drag-and-drop tasks. The results of three controlled experiments suggest that ForceEdge improves over the macOS and iOS system baselines for top-to-bottom select and move tasks.
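A transfer function of the kind described above can be sketched in a few lines of Python. The shape below (a dead zone followed by a power curve) and all parameter values are assumptions chosen for illustration; they are not the transfer functions evaluated with ForceEdge.

```python
def force_to_scroll_rate(force: float,
                         dead_zone: float = 0.1,   # assumed: ignore very light presses
                         max_rate: float = 60.0,   # assumed maximum rate, in lines/s
                         exponent: float = 2.0     # assumed curve shape
                         ) -> float:
    """Map a normalized force in [0, 1] to an autoscrolling rate.

    Forces inside the dead zone produce no scrolling. Above it, the rate
    follows a power curve so that light forces give fine control and
    strong forces give fast scrolling.
    """
    f = min(max(force, 0.0), 1.0)  # clamp out-of-range sensor readings
    if f <= dead_zone:
        return 0.0
    t = (f - dead_zone) / (1.0 - dead_zone)  # rescale to (0, 1]
    return max_rate * t ** exponent
```

Because the rate depends only on the applied force, the same mapping can be used with a small, fixed control area regardless of the distance available between the viewport and the display edge.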

Free-space gestural interaction

Exploring at-your-side gestural interaction for ubiquitous environments

Free-space or in-air gestural systems face two major issues: a lack of subtlety due to explicit mid-air arm movements, and the highly effortful nature of such interactions. The lack of subtlety can influence whether gestures are used; specifically, if gestures require large-scale movement, social embarrassment can restrict their use to private contexts. Similarly, large-scale movements are tiring, further limiting gestural input to short-term tasks performed by physically healthy users.

We believe that, to promote gestural input, lower-effort and more socially acceptable interaction paradigms are essential. To address this need, we explored at-one’s-side gestural input, where the user gestures with their arm down at their side in order to issue commands to external displays. Within this space, we presented the results of two studies that investigate the use of side-gesture input for interaction [35]. First, we investigated end-user preference through a gesture elicitation study, presented a gesture set, and validated the need for dynamic, diverse, and variable-length gestures. We then explored the feasibility of designing such a gesture recognition system, dubbed WatchTrace, which supports alphanumeric gestures of up to length three with an average accuracy of up to 82%, providing a rich, dynamic, and feasible gestural vocabulary.

Effect of motion-gesture recognizer error pattern on user workload and behavior

In free-space gesture recognition, the system receiving gesture input needs to interpret that input as the correct gesture. We characterize a system's ability to do this using two measures: precision, the proportion of recognized gestures that are classified correctly rather than misclassified; and recall, the proportion of performed gestures of a given class that the system recognizes. To promote precision, systems frequently require gestures to be performed more accurately and reject less careful gestures as input, forcing the user to retry the action. To enforce this accuracy, the system sets a threshold, a criterion value that describes how accurately the gesture must be performed. If we tighten this threshold, we increase the precision of the system, but this in turn results in more rejected gestures, negatively impacting recall.
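The trade-off between precision and recall can be illustrated with a small Python sketch. It uses the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN), and assumes a hypothetical recognizer that accepts a movement when its distance to a gesture template is at most `threshold`. The data format and the function name `precision_recall` are assumptions made for the example.

```python
from typing import Sequence, Tuple


def precision_recall(attempts: Sequence[Tuple[float, bool]],
                     threshold: float) -> Tuple[float, float]:
    """Compute (precision, recall) for a distance-threshold recognizer.

    Each attempt is (distance_to_template, was_intended), where
    was_intended is True when the user really meant to perform the gesture.
    A movement is accepted when its distance is <= threshold.
    """
    accepted = [(d, ok) for d, ok in attempts if d <= threshold]
    tp = sum(1 for _, ok in accepted if ok)        # intended and accepted
    fp = len(accepted) - tp                        # accepted but not intended
    fn = sum(1 for d, ok in attempts if ok and d > threshold)  # intended but rejected
    precision = tp / (tp + fp) if accepted else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 1.0
    return precision, recall


# Hypothetical data: four intended gestures and two unintended movements.
attempts = [(0.1, True), (0.2, True), (0.35, False),
            (0.4, True), (0.6, True), (0.7, False)]
loose = precision_recall(attempts, threshold=0.5)
tight = precision_recall(attempts, threshold=0.3)
```

On this made-up data, tightening the threshold from 0.5 to 0.3 removes the false acceptance but also rejects one more intended gesture, so precision rises while recall falls.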

Bi-level thresholding is a motion gesture recognition technique that mediates between precision and recall using two threshold levels: a tighter threshold that limits recognition errors and thus boosts precision, and a looser threshold that promotes higher recall. These two thresholds accomplish this goal by analyzing movements in sequence and treating two less precise gestures the same as a single more precise gesture, i.e. two near-miss gestures are interpreted the same as a single accurately performed gesture. We explored the effects of bi-level thresholding on the workload and acceptance of end-users [28]. Using a Wizard-of-Oz recognizer, we held recognition rates constant and compared fixed versus bi-level thresholding. Given identical recognition rates, we showed that systems using bi-level thresholding result in significantly lower workload scores (on the NASA-TLX) and significantly lower accelerometer variance.
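The decision rule described above can be sketched as follows in Python. This is a simplified illustration under stated assumptions, not the recognizer used in the study: gestures are summarized by a single distance to a template, the two near-misses must be consecutive, and the class name `BiLevelRecognizer` and the threshold values are hypothetical.

```python
class BiLevelRecognizer:
    """Accept a gesture on one accurate attempt or on two consecutive near-misses."""

    def __init__(self, tight: float = 0.3, loose: float = 0.6) -> None:
        # Assumed thresholds on the distance to the gesture template.
        if tight > loose:
            raise ValueError("tight threshold must not exceed loose threshold")
        self.tight = tight
        self.loose = loose
        self._pending_near_miss = False

    def feed(self, distance: float) -> bool:
        """Process one movement and return True if the gesture is recognized."""
        if distance <= self.tight:
            # Accurate gesture: recognized immediately.
            self._pending_near_miss = False
            return True
        if distance <= self.loose:
            # Near-miss: recognized only if the previous movement was also a near-miss.
            if self._pending_near_miss:
                self._pending_near_miss = False
                return True
            self._pending_near_miss = True
            return False
        # Clear miss: assumed to reset the near-miss sequence.
        self._pending_near_miss = False
        return False
```

A real implementation would likely also bound the time between the two near-misses; that detail is left out here because it is not described in the text.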

Rapid interaction with interface controls in mid-air

Freehand interactions with large displays often rely on a “point & select” paradigm. However, constant hand movement in the air for pointer navigation quickly leads to arm and hand fatigue. We introduced summon & select, a new model for freehand interaction where, instead of navigating to the control, the user summons it into focus and then manipulates it [27]. Summon & select addresses the problems of constant pointer navigation, the need for precise selection, and the out-of-bounds gestures that plague point & select. We conducted two studies to evaluate the design and compare it against point & select in a multi-button selection task. Results suggest that summon & select helps users perform faster and imposes lower physical and mental demand than point & select.

Using toolbar button icons to communicate keyboard shortcuts

Toolbar buttons are frequently used widgets for selecting commands. They are designed to occupy little screen real estate, yet they convey a lot of information to users: the icon is directly tied to the meaning of the command, the color of the button indicates whether the command is available, and the overall shape and shadow effect together afford a point & click interaction to execute the command. Most commands attached to toolbars can also be selected by using an associated keyboard shortcut. Keyboard shortcuts enable users to reach higher performance than selecting a command through pointing and clicking, especially for frequent actions such as repeated “Copy/Paste” operations. However, keyboard shortcuts suffer from poor accessibility. We proposed a novel perspective on the design of toolbar buttons that aims to increase keyboard shortcut accessibility [25]. IconHK implements this perspective by blending visual cues that convey keyboard shortcut information into toolbar buttons without denaturing the pictorial representation of their command (see Fig. 4). We introduced three design strategies to embed the hotkey, a visual encoding to convey the modifiers, and a magnification factor that determines the blending ratio between the pictogram of the button and the visual representation of the hotkey. Two studies explored the benefits of IconHK for end-users and provided insights from professional designers on its practicality for creating icon sets. Based on these insights, we built a tool to assist designers in applying the IconHK design principles.
